Research Topic: An Outperforming Artificial Intelligence Model to Identify Referable Blepharoptosis for General Practitioners

Written by: 王馨 (student)

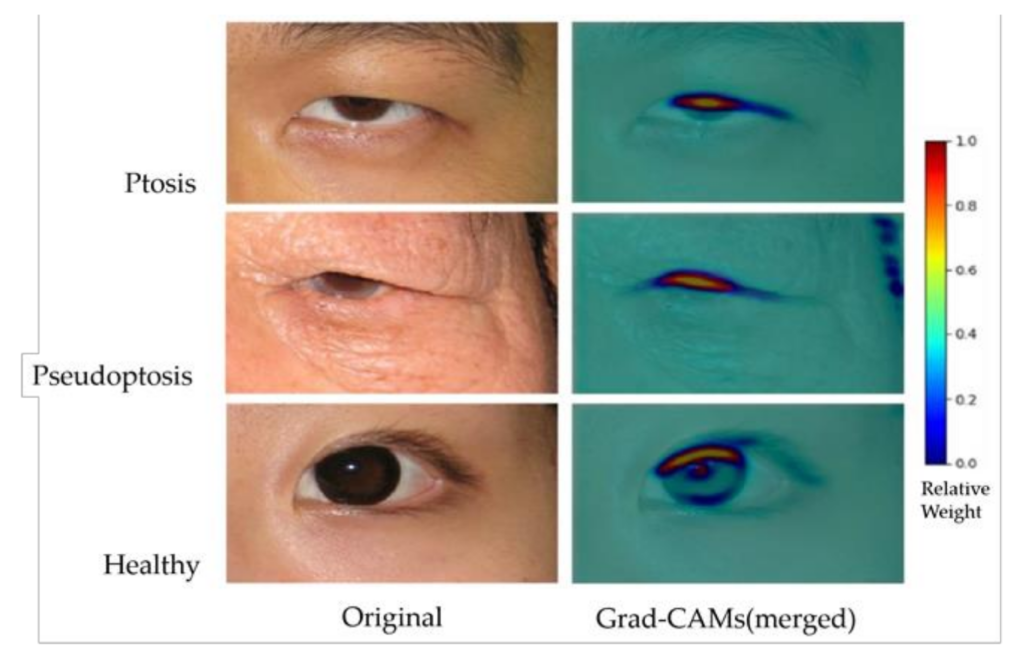

Blepharoptosis, also known as ptosis, is the drooping or inferior displacement of the upper eyelid. Ptosis can obstruct the visual axis and affect vision. It can also be a presenting sign of a severe medical disorder, such as ocular myasthenia [1], third cranial nerve palsy [2], or Horner syndrome [3]. General practitioners therefore need to diagnose ptosis accurately so that they can decide on referral and workup when necessary.

Because measurements of eyelid landmarks have low repeatability and reproducibility and are subject to learning-curve effects [5,6], accurately recognizing ptosis is challenging for non-ophthalmologists. Professor Chiou-Shann Fuh and his team therefore developed an artificial intelligence model that automatically identifies referable blepharoptosis, intended as a tool to assist general practitioners in diagnosing ptosis.

In this study, Fuh's team used VGG-16 as the base structure to train an AI model to diagnose ptosis accurately. The last few layers of VGG-16's architecture were replaced with a global max pooling layer followed by fully connected layers and a sigmoid function for the binary classification problem. The model also uses transfer learning, importing weights trained on ImageNet, and applies data augmentation to prevent overfitting. For comparison, three specialists, one each in emergency medicine, neurology, and family medicine, were tested as the non-ophthalmologist group. The AI model and the physician group were given the same test set of 25 healthy eyelids and 25 ptotic eyelids and were asked to distinguish the ptotic eyelids from the healthy ones. The AI model achieved an accuracy of 90%, with a sensitivity of 92% and a specificity of 88%; the physician group achieved an accuracy of 77.33%, with a sensitivity of 72% and a specificity of 82.67%. These results suggest that an AI-aided diagnostic tool can accurately detect blepharoptosis and prompt referral for ophthalmic evaluation when necessary.
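The reported percentages can be checked against the test-set size with a short calculation. The sketch below is illustrative only: the confusion-matrix counts are not given in the text but are inferred from the reported percentages, assuming that the physician figures pool the readings of all three specialists (3 × 50 = 150 readings). The names `ConfusionCounts` and `diagnostic_metrics` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # ptotic eyelids correctly called ptotic
    fn: int  # ptotic eyelids missed
    tn: int  # healthy eyelids correctly called healthy
    fp: int  # healthy eyelids wrongly called ptotic


def diagnostic_metrics(c: ConfusionCounts) -> dict:
    """Return accuracy, sensitivity, and specificity as fractions."""
    total = c.tp + c.fn + c.tn + c.fp
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
    }


# ASSUMPTION: counts inferred from the reported percentages, not stated in the text.
# AI model: 25 ptotic + 25 healthy eyelids.
ai = ConfusionCounts(tp=23, fn=2, tn=22, fp=3)
# Physician group: three readers pooled, 75 ptotic + 75 healthy readings.
physicians = ConfusionCounts(tp=54, fn=21, tn=62, fp=13)

for name, counts in (("AI model", ai), ("Physician group", physicians)):
    metrics = diagnostic_metrics(counts)
    print(name, {key: f"{value:.2%}" for key, value in metrics.items()})
```

Under these assumed counts the three metrics reproduce the figures quoted above for both groups, which suggests the physician results were pooled across readers rather than averaged per reader, although the text does not say so explicitly.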

In addition, Grad-CAM was used to visualize whether the AI predictions were reasonable, and it also helped identify biases in the image dataset. For example, a preoperative marking around the eye or a postoperative suture on the eyelid may give the AI model misleading clues instead of eyelid information relevant to blepharoptosis. The Grad-CAM results (as shown in the attached figure) demonstrated a hotspot area (weights of 0.5–1.0) between the upper eyelid margin and the central corneal light reflex, which is clinically compatible with the MRD-1 (margin reflex distance 1) concept. The cold zone in the background (weights of 0–0.2) successfully excluded dataset biases, providing stronger faithfulness. With larger and more diverse data in the future, more precise results can be expected for understanding AI predictions.
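To make the heatmap weights concrete, the following is a minimal NumPy sketch of the standard Grad-CAM computation, not the team's actual code. It assumes the feature maps and gradients of the last convolutional layer have already been extracted; the 14 × 14 × 512 shape corresponds to VGG-16's last convolutional layer for a 224 × 224 input, which is an assumption because the input size is not stated here. The arrays are synthetic random data, and the function name `grad_cam` is hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM heatmap scaled to [0, 1].

    activations: (H, W, K) feature maps of the last convolutional layer.
    gradients:   (H, W, K) gradients of the class score w.r.t. those maps.
    """
    # Channel weights: global average of the gradients over spatial positions.
    weights = gradients.mean(axis=(0, 1))  # shape (K,)
    # Weighted sum of the feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum((activations * weights).sum(axis=-1), 0.0)  # shape (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Synthetic stand-ins for real network outputs (ASSUMPTION: 14 x 14 x 512).
rng = np.random.default_rng(0)
acts = rng.random((14, 14, 512))
grads = rng.normal(size=(14, 14, 512))

heatmap = grad_cam(acts, grads)
hot_zone = heatmap >= 0.5   # hotspot range quoted above (0.5-1.0)
cold_zone = heatmap <= 0.2  # cold range quoted above (0-0.2)
print(heatmap.shape, int(hot_zone.sum()), int(cold_zone.sum()))
```

In practice the low-resolution map would be upsampled to the input image size and overlaid on the eye photograph, so that the hot and cold zones can be compared with anatomical landmarks such as the eyelid margin.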